Jun 11, 2023

Traditionally a trusted communication channel, phone calls have been increasingly abused by spammers and scammers in recent years [1], [2]. Phone scams remain difficult to tackle because of a combination of protocol limitations, legal enforcement challenges, and advances in technology that let attackers hide their identities and reduce costs. Scammers use social engineering techniques to manipulate victims into revealing personal details, purchasing online vouchers, or transferring funds to attacker-controlled accounts [3]. This led to an estimated $400 million in losses to Australians in 2020 alone [4]. The problem is not limited to the public sphere: a growing number of scam calls are directed at corporate call centres [4], [5].

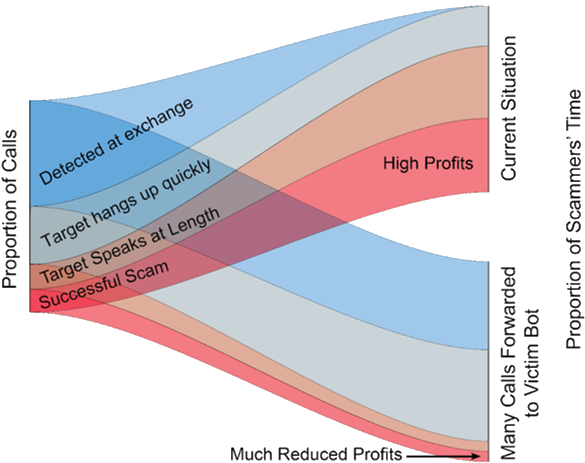

Currently, the only strategies used to fight public phone scams are simple heuristics that telecommunication service providers apply to filter scam calls at the exchange, and advice to the public to be wary of unsolicited calls. Neither approach is effective, so scam calls continue to be a significant public menace. Apate presents a novel approach: defeating phone scam operators by breaking their business model and making their operations unprofitable. This is achieved by developing conversational AI bots that present as convincing, potentially viable scam victims. To this end, Apate is:

- Researching scammer methods and techniques through analysis of collected scam call data.

- Building conversational AI bots capable of keeping real scammers on the line for a considerable period of time.

- Developing improved scam detection, both at the exchange and early in a call (integrated into a mobile phone app), through collection and analysis of large-scale scam call data.

- Developing three practical methods to deploy the bots: a telephony honeypot (a collection of phone numbers that connect directly to a bot), redirection of scam calls detected at the exchange, and a mobile app through which users can redirect scam calls to the bots.

The core of our innovation is the development of conversational AI bots that are capable of fooling scammers into thinking they are talking to viable scam victims, hence causing them to spend time attempting to scam the bots.

Recent advances in Natural Language Processing (NLP), and in conversational AI in particular, have led to AI agents capable of fluent speech, adopting personas, and tracking information within a conversation. Recent advances in voice generation technologies have enabled convincing “voice cloning” that is hard to distinguish from real voices. Together, these advances now make it possible for a bot to conduct conversations over the phone and appear convincingly as a human interlocutor.

Such bots can often be quickly identified as non-human in normal conversation, largely because of their limited ability to track conversational context, although recent advances have dramatically improved this ability [6], [7]. Two factors mitigate this weakness. First, the scammer is motivated to pursue a victim until payment: so long as they suspect they may be talking to a real person, they will likely stay on the line. Second, many of the truly vulnerable are easily confused by complex processes, which resembles how a bot may appear when it fails to properly integrate and respond to information provided by the scammer. This is evidenced by “Lenny”, a simple bot consisting only of pre-recorded phrases that play in a loop irrespective of what the scammer says. Lenny achieved an average call length of around 5 minutes over 20,000 calls with scammers [8].
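The looping behaviour attributed to Lenny is simple enough to sketch in a few lines. The following is a minimal illustration, not Lenny's actual implementation: the `LoopingBot` class, its `reply` method, and the placeholder phrases are all hypothetical, and text strings stand in for pre-recorded audio clips.

```python
from itertools import cycle

# Hypothetical placeholder phrases; these are NOT the real Lenny recordings.
PLACEHOLDER_PHRASES = [
    "Hello?",
    "Sorry, I can barely hear you. Could you say that again?",
    "Yes, yes, go on.",
    "Hold on, let me find my glasses.",
]


class LoopingBot:
    """Replies with the next fixed phrase, ignoring what the caller said."""

    def __init__(self, phrases):
        if not phrases:
            raise ValueError("at least one phrase is required")
        # cycle() restarts from the first phrase once the list is exhausted.
        self._phrases = cycle(list(phrases))

    def reply(self, caller_utterance: str) -> str:
        # The caller's words are deliberately unused: the bot has no
        # language understanding and simply plays the next phrase.
        return next(self._phrases)


if __name__ == "__main__":
    bot = LoopingBot(PLACEHOLDER_PHRASES)
    for line in ["This is your bank calling.", "Can you confirm your card number?"]:
        print(bot.reply(line))
```

Because `reply` never inspects its argument, any apparent coherence comes entirely from how the phrases are written, which is the point the Lenny example makes.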

With scale, our bots will dilute the market and leave no room for scammers to steal from legitimate victims. What's a scammer to do when nine tenths of their calls lead to talking to a bot for 10 minutes? Fewer successful scams means fewer scammers.
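To make the nine-tenths scenario concrete, here is a back-of-the-envelope calculation. It is only a sketch under simplifying assumptions that are not from the project itself: every bot call lasts exactly the stated 10 minutes, and the hypothetical function `bot_minutes_per_human_call` ignores dialling time and unanswered calls.

```python
from fractions import Fraction


def bot_minutes_per_human_call(bot_share: Fraction, minutes_per_bot_call: int) -> Fraction:
    """Expected minutes spent on bots for each call that reaches a real person.

    bot_share is the fraction of answered calls that land on a bot (assumed
    constant); minutes_per_bot_call is the assumed length of each bot call.
    """
    if not 0 <= bot_share < 1:
        raise ValueError("bot_share must be in the range [0, 1)")
    # With a share s of calls going to bots, there are s / (1 - s) bot calls
    # for every call that reaches a human.
    bot_calls_per_human_call = bot_share / (1 - bot_share)
    return bot_calls_per_human_call * minutes_per_bot_call


# Nine tenths of calls reach a bot, each lasting 10 minutes.
print(bot_minutes_per_human_call(Fraction(9, 10), 10))  # prints 90
```

Under these assumptions, a scammer would spend 90 minutes talking to bots for every call that reaches a real person.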